
Each lab corresponds to a module covered in the workshop and provides you with hands-on experience working with the NGINX core software.

Prerequisites

- Basic familiarity with web servers

- Linux

- vim or nano for file editing

- Basic networking, as you need to allow some TCP ports for ingress traffic

Note: this workshop can also be performed on a local system (a laptop with a Linux-based OS).

Lab 1: Getting Started with NGINX

Log in to the server and install NGINX.

For Amazon EC2 / CentOS:

sudo yum install nginx -y

For Ubuntu / Debian:

sudo apt install nginx -y

Check the status of the NGINX service, start it if it is stopped, and enable it so that it starts at boot:

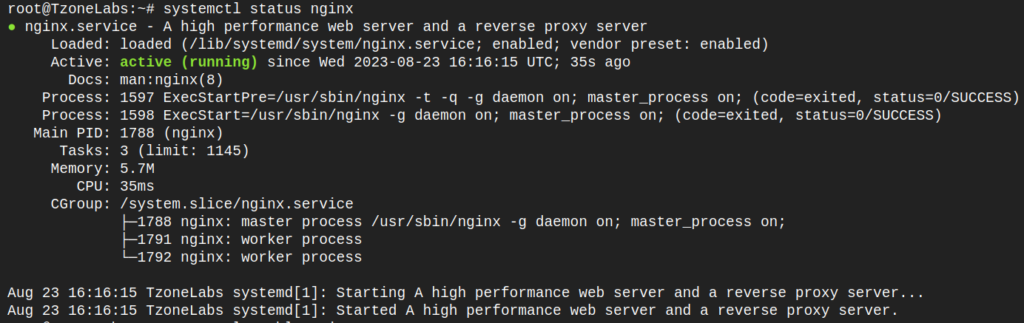

sudo systemctl status nginx

sudo systemctl start nginx

sudo systemctl enable nginx

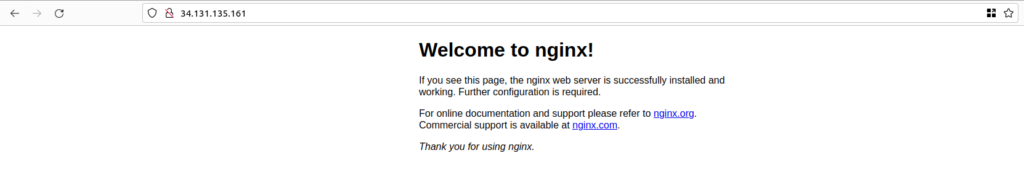

Access the NGINX web page

You have now successfully installed NGINX. To access the default NGINX page, open your browser and go to http://<your_system_public_ip>/ .

This page is served from the root location (/usr/share/nginx/html/index.html), which is defined in the default NGINX conf file under /etc/nginx/conf.d.

Working with Configuration Files

Change to the configuration directory, where you will see the default conf file:

cd /etc/nginx/conf.d

Back up the default.conf file:

sudo mv default.conf default.conf.bak

Use the vim editor to create a new configuration file called my_server.conf:

sudo vim my_server.conf

Type “i” to enter insert mode.

Add the following to your file:

server {

listen 80;

root /var/my_files/custom_html;

}

Save the file:

Press the ESC key to exit insert mode, then type :wq and press Enter to save and exit the file with changes (write, quit).

Note: To exit a file without saving changes, press the ESC key, then type :q!
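If you prefer not to use an interactive editor, the same file can be written with a shell heredoc. This is only a minimal sketch: CONF_DIR is a scratch directory created for illustration (an assumption, not part of the workshop), standing in for /etc/nginx/conf.d.

```shell
#!/bin/sh
# Scratch directory standing in for /etc/nginx/conf.d (assumption for this sketch).
CONF_DIR=$(mktemp -d)

# Write the same server block as above without opening an editor.
cat > "$CONF_DIR/my_server.conf" <<'EOF'
server {
    listen 80;
    root /var/my_files/custom_html;
}
EOF

# Show what was written.
cat "$CONF_DIR/my_server.conf"
```

Writing to the real /etc/nginx/conf.d directory requires root privileges, so on the server you would typically pipe the heredoc into sudo tee instead of redirecting with >.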

The root directory /var/my_files/custom_html does not exist yet, so create it first:

sudo mkdir -p /var/my_files/custom_html

Go to that directory and create an index.html file:

cd /var/my_files/custom_html

sudo vim index.html

Paste the content below into index.html. You can modify the content of the file; this workshop uses the following:

<!DOCTYPE html>

<html>

<head>

<title>TzoneLabs</title>

<style>

body {

font-family: Arial, sans-serif;

background-color: #f2f2f2;

margin: 0;

padding: 0;

display: flex;

justify-content: center;

align-items: center;

height: 100vh;

}

.container {

text-align: center;

padding: 20px;

background-color: #ffffff;

border-radius: 10px;

box-shadow: 0 0 10px rgba(0, 0, 0, 0.2);

}

h1 {

color: #333333;

}

p {

color: #666666;

}

</style>

</head>

<body>

<div class="container">

<h1>Welcome to TzoneLabs</h1>

<p>Our blog is your one-stop destination for insightful and in-depth articles on a wide range of topics, spanning the realms of AWS, DevOps, Ansible, and an array of powerful open-source tools.</p>

</div>

</body>

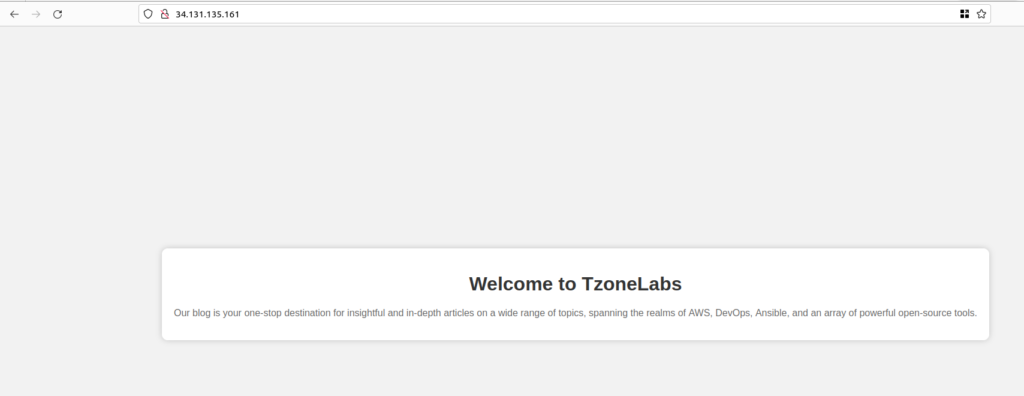

</html>and verify nginx configuration using nginx -t and reload

sudo nginx -t

sudo systemctl reload nginx

or

sudo nginx -s reloadthen again access webpage from the browser

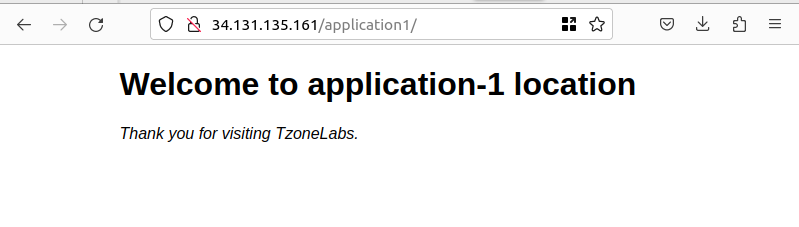

Lab 2: Serving Content: Location

Edit the /etc/nginx/conf.d/my_server.conf file and add two locations, as shown below:

server {

listen 80;

root /var/my_files/custom_html;

location /application1 {

}

location /application2 {

}

}as these locations are not present in the root location(/var/my_files/custom_html) so first create these locations

sudo mkdir -p /var/my_files/custom_html/application1

sudo mkdir -p /var/my_files/custom_html/application2and then reload nginx

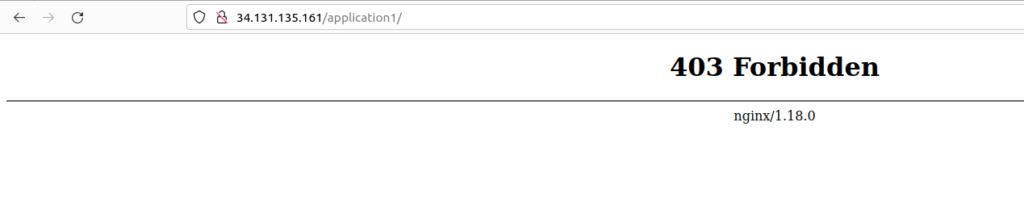

sudo nginx -s reloadand access your nginx web server with the application1 or application2 location http://<your_server_ip>/application1

Why do you think you received a “403 Forbidden” on the application URLs?

Note there is no index.html file, which NGINX looks for by default

Note: by default, nginx looks for index.html if you want to use another HTML file as index file we have to add a directive in nginx conf. we will use the same in below.
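The behavior can be pictured with a small shell model. This is a simplified sketch, not NGINX's actual logic: the status_for_dir function and the scratch directory are hypothetical, and it assumes directory listing (autoindex) is off, which is the default.

```shell
#!/bin/sh
# Simplified model: report the status a directory request would get,
# depending on whether the configured index file exists in that directory.
status_for_dir() {
    # $1 = directory on disk, $2 = index file name (NGINX default: index.html)
    if [ -f "$1/$2" ]; then
        echo 200
    else
        echo 403
    fi
}

# Scratch directory standing in for /var/my_files/custom_html/application1.
APP_DIR=$(mktemp -d)
touch "$APP_DIR/app1.html"

status_for_dir "$APP_DIR" index.html   # default index name, file is missing
status_for_dir "$APP_DIR" app1.html    # index name set with "index app1.html;"
```

The first call models the default lookup for index.html, and the second models the same directory once the index directive names a file that exists.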

Create an app1.html file in the /var/my_files/custom_html/application1 directory:

sudo vim /var/my_files/custom_html/application1/app1.html

Paste the content below, then save the file and exit.

<!DOCTYPE html>

<html>

<head>

<title>application1</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to application-1 location </h1>

<p><em>Thank you for visiting TzoneLabs.</em></p>

</body>

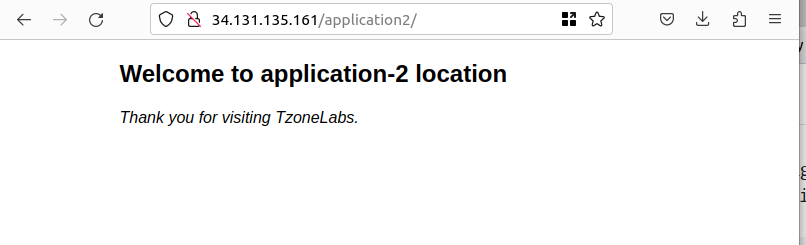

</html>same create app2.html file under /var/my_files/custom_html/application2 directory

vim /var/my_files/custom_html/application1/app2.htmlpaste below content and save file and exit.

<!DOCTYPE html>

<html>

<head>

<title>application2</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h2>Welcome to application-2 location </h2>

<p><em>Thank you for visiting TzoneLabs.</em></p>

</body>

</html>

Now modify the /etc/nginx/conf.d/my_server.conf file to serve the HTML files created above:

server {

listen 80;

root /var/my_files/custom_html;

location /application1 {

index app1.html; ## added this

}

location /application2 {

index app2.html; ## added this

}

}

Now reload NGINX:

sudo nginx -s reload

Then access the NGINX locations in the browser using http://<your_server_ip>/application1 and http://<your_server_ip>/application2

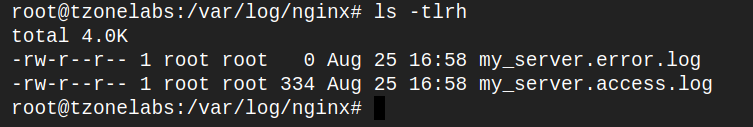

Lab 3: Logging

Setting up Logging

Open your my_server.conf file and update it with the following changes.

Add an error log directive in the server context with a level of info. Add an access log directive in the server context with a type/name of “combined”.

server {

error_log /var/log/nginx/my_server.error.log info;

access_log /var/log/nginx/my_server.access.log combined;

listen 80;

root /var/my_files/custom_html;

location /application1 {

index app1.html; ## added this

}

location /application2 {

index app2.html; ## added this

}

}

Save the file and reload NGINX:

sudo nginx -s reload

Then access the NGINX locations in the browser using http://<your_server_ip>/application1 and http://<your_server_ip>/application2

Look at the access log using the following command:

cat /var/log/nginx/my_server.access.log

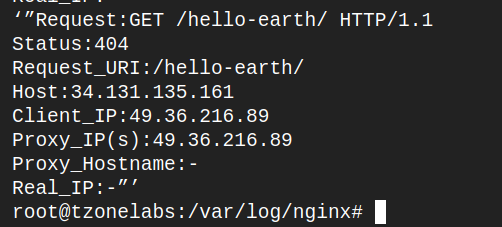

Create a Custom Log

Add the following log_format directive to your my_server.conf file in the http context (i.e. at the top of the file, outside the server block).

log_format custom_log '"Request: $request\n Status: $status\n Request_URI: $request_uri\n Host: $host\n Client_IP: $remote_addr\n Proxy_IP(s): $proxy_add_x_forwarded_for\n Proxy_Hostname: $proxy_host\n Real_IP: $http_x_real_ip"';

server {

error_log /var/log/nginx/my_server.error.log info;

access_log /var/log/nginx/my_server.access.log custom_log;

listen 80;

root /var/my_files/custom_html;

location /application1 {

index app1.html;

}

location /application2 {

index app2.html;

}

}

Be sure to change the “combined” access log type to match the name given in the log_format directive (custom_log).

Save the file, reload NGINX, and access the NGINX location in the browser using http://<your_server_ip>/application1 or http://<your_server_ip>/application2

Look at the access log using the following command:

cat /var/log/nginx/my_server.access.log

You should see that the log file now shows one variable per line, with a descriptive label before each.

Map Directive with Conditional Logging

We will add a map that sets the $loggable variable so that access is logged only when the returned status code starts with a digit other than 2 or 3.

In the my_server.conf file, in the http context (top of the file), create a map that excludes response codes beginning with 2 or 3, that is, 2xx and 3xx codes.

Add the content below at the top of the file:

map $status $loggable {

~^[23] 0;

default 1;

}

In the access_log directive, add the if parameter with the new custom variable ($loggable) as follows:

access_log /var/log/nginx/my_server.access.log custom_log if=$loggable;

Save the file, reload NGINX, and access the NGINX location in the browser using http://<your_server_ip>/application1 or http://<your_server_ip>/application2

Then request a page that does not exist, such as:

http://<your_server_ip>/hello-earth

View the access log:

sudo cat /var/log/nginx/my_server.access.log

You should see that only requests with 4xx or 5xx status codes (such as 404) appear.
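The effect of the map can be mimicked in plain shell to check which status codes end up in the log. This is a sketch only: the loggable function is a hypothetical stand-in for the $loggable variable, not something NGINX provides.

```shell
#!/bin/sh
# Mirror of the map above: statuses starting with 2 or 3 give 0 (not logged),
# everything else gives 1 (logged).
loggable() {
    case "$1" in
        [23]*) echo 0 ;;
        *)     echo 1 ;;
    esac
}

for status in 200 304 404 500; do
    echo "$status -> loggable=$(loggable "$status")"
done
```

Because access_log only writes an entry when the if value is neither empty nor "0", the 2xx and 3xx responses are skipped.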

Lab 4: Proxying HTTP Requests

In this lab, you add a second server configuration file that listens on a port other than 80, then point to that server from the first configuration file using the proxy_pass directive.

Create a new configuration file called my_server2.conf using these commands:

$ cd /etc/nginx/conf.d

$ sudo vim /etc/nginx/conf.d/my_server2.conf

Create the following server block, which listens on port 8080 and serves files from the /var/my_server2 directory:

server {

listen 8080;

root /var/my_server2;

access_log /var/log/nginx/my_server2.access.log custom_log;

}

Save the file.

Next, create the index.html file that you will be proxying to. Create the /var/my_server2/sampleApp directory if it does not exist, then add the content below to the file /var/my_server2/sampleApp/index.html

<!DOCTYPE html>

<html>

<head>

<title>SampleAPP</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

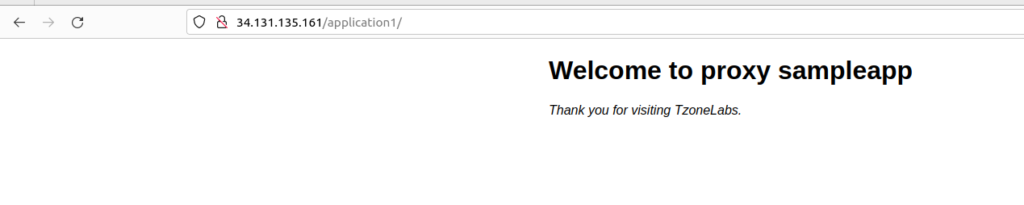

<h1>Welcome to proxy sampleapp </h1>

<p><em>Thank you for visiting TzoneLabs.</em></p>

</body>

</html>now open my_server.conf to set up your proxy. Add the following proxy_pass statement in your /application1 location:

location /application1 {

index app1.html;

proxy_pass http://localhost:8080/sampleApp;

}Save the file and reload NGINX and access the nginx location in the browser using http://<your_server_ip>/application1

Our reserve proxy configuration seems to be complete

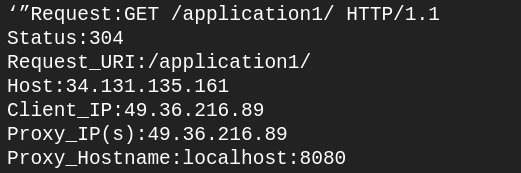

Tail the my_server1 access log

sudo tail -f /var/log/nginx/my_server.access.logrefresh your browser so we can send another request to http://<your_server_ip>/application1 and check access log in file /var/log/nginx/my_server.access.log

The Host value should be your EC2/server/VM hostname/IP and the Client IP should be your IP address.

Notice the Proxy Hostname is localhost:8080. This is the hostname we configured on our proxy_pass directive

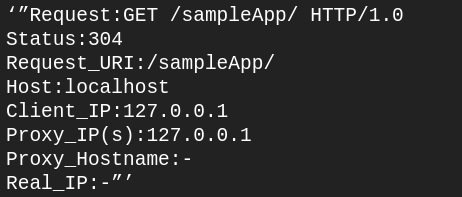

Now open the my_server2 access log and observe the log entry for the same request

sudo tail -f /var/log/nginx/my_server2.access.log

The request URI has changed from /application1 to /sampleApp/, because NGINX replaces the part of the URI that matches the location with the path specified in our proxy_pass directive.

However, the Client IP is now 127.0.0.1. This is because our my_server2 backend application sees the NGINX reverse proxy (my_server) as the client. The Host is now “localhost”. As a result, our backend application does not know the true client IP address or the original hostname of the request.
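The path replacement can be sketched in shell. This is an illustration only: upstream_uri is a hypothetical helper that mirrors the documented behavior for a prefix location whose proxy_pass includes a URI, and it ignores details such as URI normalization.

```shell
#!/bin/sh
# For a prefix location, the part of the request URI that matches the
# location is replaced by the URI given in proxy_pass.
upstream_uri() {
    # $1 = location prefix, $2 = URI part of proxy_pass, $3 = request URI
    rest=${3#"$1"}
    printf '%s%s\n' "$2" "$rest"
}

upstream_uri /application1 /sampleApp /application1
upstream_uri /application1 /sampleApp /application1/
upstream_uri /application1 /sampleApp /application1/page.html
```

The second call shows the form that appears in the my_server2 log: a request for /application1/ reaches the backend as /sampleApp/.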

Open my_server.conf and add the following directives into the server context.

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For

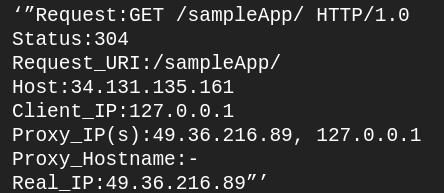

$proxy_add_x_forwarded_for;Save the configuration and reload NGINX

Tail your my_server2 access log

sudo tail -f /var/log/nginx/my_server2.access.logOn your browser, send a request for http://<your_server_ip>/application1 and observe

your log entry. Now you should see the following:

Notice how we have the full domain name that we specified on the browser. This is due to the proxy_set_header Host $host; line in our configuration.

The client IP is still 127.0.0.1. However, we now have the original Client IP in the Real_IP field of our log. This is because we set the X-Real-IP header to $remote_addr.

Finally, the Proxy_IP(s) field shows both the original client IP, followed by our NGINX reverse proxy IP. This value comes from the X-Forwarded-For header.
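How the X-Forwarded-For value grows at each hop can be modeled in shell. In this sketch the function name simply mirrors the NGINX variable, and the addresses are documentation-range placeholders (assumptions, not values from the workshop).

```shell
#!/bin/sh
# Model of $proxy_add_x_forwarded_for: the incoming X-Forwarded-For header
# with $remote_addr appended, or just $remote_addr if the header is absent.
proxy_add_x_forwarded_for() {
    # $1 = incoming X-Forwarded-For value (may be empty), $2 = $remote_addr
    if [ -n "$1" ]; then
        echo "$1, $2"
    else
        echo "$2"
    fi
}

# At my_server: the browser sent no X-Forwarded-For header.
proxy_add_x_forwarded_for "" 203.0.113.7

# At my_server2: the header set by my_server, plus the proxy's own address.
proxy_add_x_forwarded_for 203.0.113.7 127.0.0.1
```

The second result is the shape of the Proxy_IP(s) field in the my_server2 log: the client address first, then the address of the proxy that forwarded the request.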

Lab 5: Routing HTTP Requests

Using the alias Directive

In this exercise, you set up a location using a regular expression that captures incoming HTTP requests for the /pictures/ URI, followed by one or more characters, a dot, and an extension of gif, jpg, jpeg, or png. In other words, the location block serves URIs of the form /pictures/<name>.<extension>. You also specify a replacement path using the alias directive.

Open the my_server.conf configuration file and create the location below:

location ~ ^/pictures/(.+\.(gif|jpe?g|png))$ {

alias /var/images/$1;

}

Save the file, reload NGINX, and access the NGINX location in the browser using http://<your_server_ip>/pictures/<picture_name>.png

For example, with logo.png: http://<your_server_ip>/pictures/logo.png

Note: First create the /var/images directory and upload an image with a png, gif, jpg, or jpeg extension, then access it from the browser.
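To see which URIs the location matches and where the alias points, the same pattern can be tried with sed. This is a rough sketch: picture_path is a hypothetical helper, and sed's extended regular expressions stand in for the PCRE engine NGINX uses (the two agree for this pattern).

```shell
#!/bin/sh
# Print the file path the alias would resolve to, or nothing if the URI
# does not match the location's regular expression.
picture_path() {
    printf '%s\n' "$1" |
        sed -nE 's#^/pictures/(.+\.(gif|jpe?g|png))$#/var/images/\1#p'
}

picture_path /pictures/logo.png
picture_path /pictures/photo.jpeg
picture_path /pictures/notes.txt    # no match, prints nothing
```

The first capture group plays the role of $1 in the alias directive, so everything after /pictures/ is appended to /var/images/.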

Setting Up Rewrite Data

In this exercise, you set up a rewrite directive to capture any URI request to /shop/greatproducts/<#> and send it to /shop/product/product<#>.html. You also put your server's address in the product1.html page so that the logo picture is requested from the /media/pics directory.

First create the directory:

sudo mkdir -p /var/my_files/custom_html/shop/product

Then add a product1.html file in that directory:

sudo vim /var/my_files/custom_html/shop/product/product1.html

Add the content below to product1.html (edit the src attribute and replace the IP address with <your_server_ip>):

<!DOCTYPE html>

<html>

<head>

<title>product</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to Product1 </h1>

<p><em>Thank you for visiting TzoneLabs.</em></p>

<p>

<img src="http://34.131.135.161/media/pics/logo.png">

</p>

</body>

</html>

Save the file.

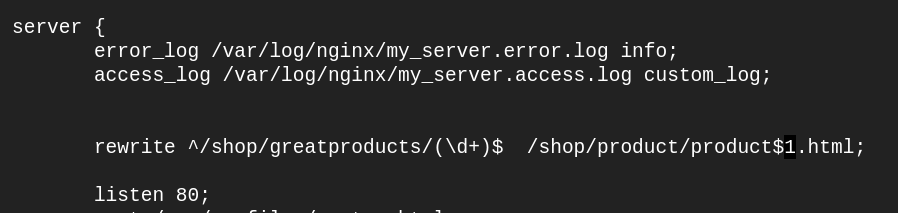

Then open my_server.conf and define a rewrite in the server context with the following regular expression and replacement string:

rewrite ^/shop/greatproducts/(\d+)$ /shop/product/product$1.html;

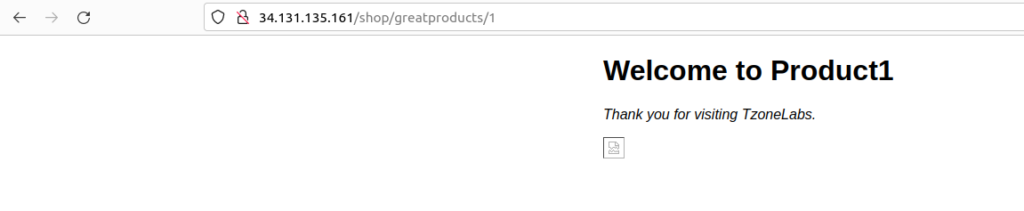

Save the file, reload NGINX, and access the NGINX location in the browser using http://<your_server_ip>/shop/greatproducts/1

You see the text content of product1.html. However, the page shows a broken image link, because nothing in my_server.conf yet serves the /media/pics path that the page points to.

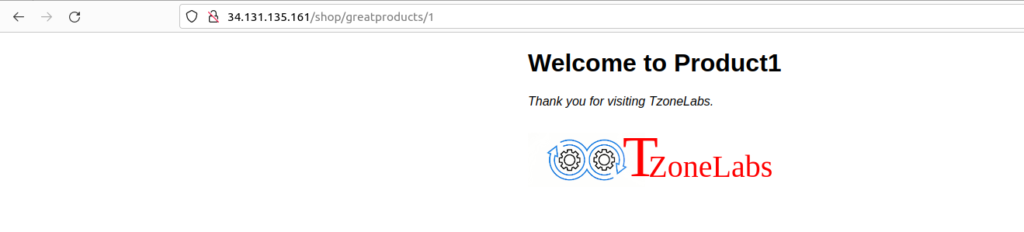

We will add another rewrite in the server context (below the first one) to correct this. It rewrites the /media/pics directory to the /pictures directory:

rewrite ^/media/pics/(.+\.(gif|jpe?g|png))$ /pictures/$1;

Save the file, reload NGINX, and access the NGINX location in the browser using http://<your_server_ip>/shop/greatproducts/1. The image now displays correctly.
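Both rewrites can be tried out with sed before reloading NGINX. This is only an approximation: apply_rewrites is a hypothetical helper, and because POSIX extended regular expressions have no \d, the sketch uses [0-9] in its place.

```shell
#!/bin/sh
# Apply the two server-level rewrites, in order, to a request URI.
apply_rewrites() {
    printf '%s\n' "$1" | sed -E \
        -e 's#^/shop/greatproducts/([0-9]+)$#/shop/product/product\1.html#' \
        -e 's#^/media/pics/(.+\.(gif|jpe?g|png))$#/pictures/\1#'
}

apply_rewrites /shop/greatproducts/1
apply_rewrites /media/pics/logo.png
apply_rewrites /application1          # matches neither rule, left unchanged
```

After the second rewrite, the /pictures/logo.png URI is picked up by the regular expression location with the alias directive, which is why the image starts to load.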

Setting Up Rewrite Flags

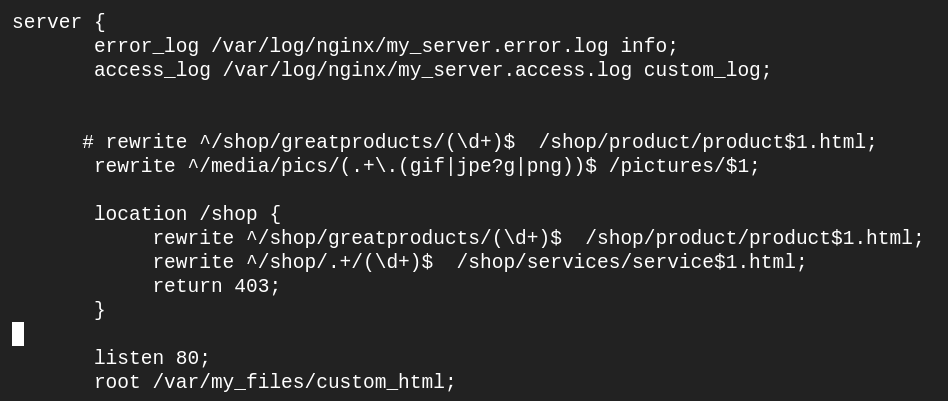

In this exercise, you move the shop/greatproducts rewrite from the server context into a new location /shop context. You also create an additional rewrite in the same /shop context, as well as a return directive, and test these with and without break flags.

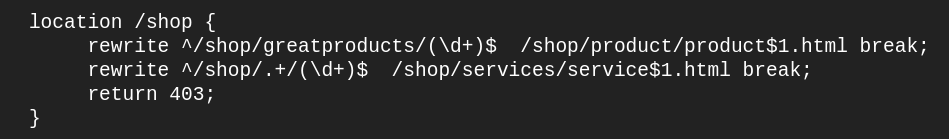

Open my_server.conf and comment out the shop/greatproducts rewrite. Add a location /shop and copy the shop/greatproducts rewrite directive into that location. Add an additional rewrite, as well as a return 403 directive, to the location block. Here is your finished example:

Save the file, reload NGINX, and access the NGINX location in the browser using http://<your_server_ip>/shop/greatproducts/1

You receive a 403 Forbidden error.

Because there are no break flags, NGINX continues to process all the rewrites in order, and then processes the return 403 directive.

Correct this issue by placing the break flag at the end of each rewrite in your location /shop block:

Save the file and reload nginx and access the nginx location in the browser using http://<your_server_ip>/shop/greatproducts/1

Lab 6: Load Balancing

Round Robin Load Balancing

In this exercise, you set up three back-end servers with round-robin load balancing. You test the configuration with and without weights.

Create a new configuration file, backends.conf, in the /etc/nginx/conf.d directory. Add three virtual servers with the following root directives and ports:

cd /etc/nginx/conf.d

sudo vim backends.conf

Paste the content below into the backends.conf file.

server {

listen 8081;

root /var/backend1;

}

server {

listen 8082;

root /var/backend2;

}

server {

listen 8083;

root /var/backend3;

}

Now create an index.html file in each of the /var/backend1, /var/backend2, and /var/backend3 directories (create the directories first if they do not exist).

First file:

sudo vim /var/backend1/index.html

Add the content below and save the file:

<h1>This is Backend-1 </h1>

Second file:

sudo vim /var/backend2/index.html

Add the content below and save the file:

<h1>This is Backend-2 </h1>

Third file:

sudo vim /var/backend3/index.html

Add the content below and save the file:

<h1>This is Backend-3</h1>

Now reload NGINX.

Access your backends in the browser using http://<your_server_ip>:8081, http://<your_server_ip>:8082, and http://<your_server_ip>:8083

Now back up your my_server.conf and my_server2.conf files:

sudo mv my_server.conf my_server.conf.bak

sudo mv my_server2.conf my_server2.conf.bak

Create a new configuration file called Servers.conf with an upstream context that points to each new server:

sudo vim Servers.conf

Add the content below and save the file:

upstream myServers {

server 127.0.0.1:8081;

server 127.0.0.1:8082;

server 127.0.0.1:8083;

}

server {

listen 80;

root /usr/share/nginx/html;

error_log /var/log/nginx/upstream.error.log info;

access_log /var/log/nginx/upstream.access.log combined;

location / {

proxy_pass http://myServers;

}

}reload NGINX

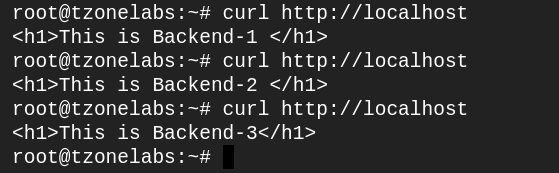

sudo nginx -s reloadTest the load balancer configuration by curling several times (you can do it on your terminal)

curl http://localhost/OR from the browser hit the below URL

http://<your_server_ip>/You should see NGINX cycle through the three web servers, one after the other as follows:

same you will see in the browser when refresh.

Now open the Servers.conf file and add weight to the second server:

upstream myServers {

server 127.0.0.1:8081;

server 127.0.0.1:8082 weight=2;

server 127.0.0.1:8083;

}

Save the file and reload NGINX.

Test the configuration. You see Backend-2 serving twice as many requests as each of the other backends.

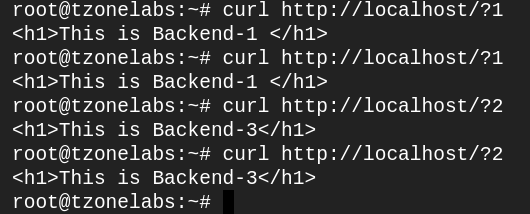
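Counting responses makes the effect of the weight easier to see than reading them one by one. The tally helper below is a hypothetical convenience, and the sample lines are stand-ins for real curl output (assumptions for this sketch).

```shell
#!/bin/sh
# Count identical response bodies read from stdin, most frequent first.
tally() {
    sort | uniq -c | sort -rn
}

# Sample bodies standing in for four consecutive responses with weight=2
# on the second backend.
printf '%s\n' \
    '<h1>This is Backend-1 </h1>' \
    '<h1>This is Backend-2 </h1>' \
    '<h1>This is Backend-2 </h1>' \
    '<h1>This is Backend-3</h1>' | tally
```

On the server, you could feed it live responses by running curl -s http://localhost/ in a loop and piping the combined output into tally.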

Hash Method Load Balancing

In this exercise, you remove the weight on your second server and change the load balancing method from the default round-robin to the hash method. You use the request URI as part of the hash key, so changing the request URI may move you to a different server, while keeping the same request URI keeps you on the same server.

Open the Servers.conf file and add the following hash directive in the upstream context. Remove the weight from the second virtual server:

upstream myServers {

hash $scheme$host$request_uri;

server 127.0.0.1:8081;

server 127.0.0.1:8082;

server 127.0.0.1:8083;

}

Save the file and reload NGINX.

Test the configuration by running curl against localhost with various arguments (in the terminal):

$ curl http://localhost/?1

$ curl http://localhost/?1

$ curl http://localhost/?2

$ curl http://localhost/?2

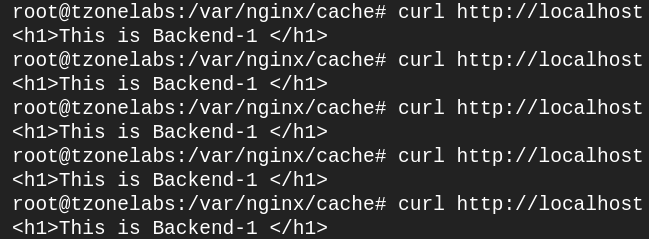
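The idea behind hash-based selection can be illustrated in shell. This sketch uses the POSIX cksum utility and a simple modulo as stand-ins; it is not the hash function or server-selection algorithm NGINX itself uses, and pick_server is a hypothetical name.

```shell
#!/bin/sh
# Map a hash key to one of N servers: the same key always gives the same
# server number, while a different key may give a different one.
pick_server() {
    # $1 = hash key (here: scheme + host + request URI), $2 = number of servers
    sum=$(printf '%s' "$1" | cksum | cut -d ' ' -f 1)
    echo $(( sum % $2 + 1 ))
}

for uri in '/?1' '/?1' '/?2' '/?2'; do
    echo "$uri -> server $(pick_server "httplocalhost$uri" 3)"
done
```

Repeated keys always land on the same server number, which is the property the curl test above relies on. Two different keys can still happen to share a server.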

Lab 7: Caching

Set up the Proxy Cache

In this lab, we set up a cache path, cache successful responses for 5 minutes, and check the cache status in the response headers.

Add a proxy cache path in the http context of your Servers.conf file (that is, at the top of the file, outside any server block):

proxy_cache_path /var/nginx/cache levels=1:2

keys_zone=upstream_cache:20m inactive=5m max_size=2G;

In the server context (the one using port 80), set the proxy_cache_key to the $scheme, $host, and $request_uri, and add a response header that exposes the cache status:

proxy_cache_key $scheme$host$request_uri;

add_header X-Proxy-Cache $upstream_cache_status;

In the location / block, enable the cache as follows:

proxy_cache upstream_cache;

proxy_cache_valid 200 5m;

The final Servers.conf file will look like this:

proxy_cache_path /var/nginx/cache levels=1:2

keys_zone=upstream_cache:20m inactive=5m max_size=2G;

upstream myServers {

server 127.0.0.1:8081;

server 127.0.0.1:8082;

server 127.0.0.1:8083;

}

server {

listen 80;

root /usr/share/nginx/html;

error_log /var/log/nginx/upstream.error.log info;

access_log /var/log/nginx/upstream.access.log combined;

location / {

proxy_pass http://myServers;

proxy_cache_key $scheme$host$request_uri;

add_header X-Proxy-Cache $upstream_cache_status;

# Enable caching using the specified cache zone

proxy_cache upstream_cache;

proxy_cache_valid 200 5m;

}

}

Save the file and reload NGINX.

Send several requests via the browser or use cURL (after the first request, responses are served from the cache):

curl -I http://localhost/
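To pull just the cache status out of the response headers, a small filter helps. The cache_status helper is hypothetical, and the header text below is a made-up sample rather than captured output.

```shell
#!/bin/sh
# Read HTTP response headers on stdin and print the X-Proxy-Cache value.
cache_status() {
    # Strip carriage returns, then match the header name case-insensitively.
    tr -d '\r' | awk -F': ' 'tolower($1) == "x-proxy-cache" { print $2 }'
}

# Hypothetical headers standing in for the output of curl -I.
printf 'HTTP/1.1 200 OK\r\nServer: nginx\r\nX-Proxy-Cache: HIT\r\n\r\n' | cache_status
```

On the server, curl -sI http://localhost/ | cache_status would typically print MISS for the first request and HIT for repeats made while the cached entry is still valid.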

Lab 8: Encrypting Web Traffic

Generate Certificate and Key

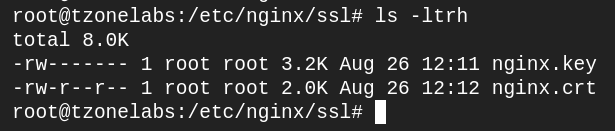

Set up the new directory and run the openssl command to generate a self-signed certificate and key.

Use the following command

sudo mkdir -p /etc/nginx/ssl

cd /etc/nginx/ssl

sudo openssl req -x509 -nodes -days 365 -newkey rsa:4096 -keyout nginx.key -out nginx.crt

Now list the contents of the /etc/nginx/ssl directory.

Configure and Test SSL Parameters

We will set up the SSL parameters according to NGINX best practices and then force all traffic to https.

Create a file called ssl-params.conf:

sudo vim /etc/nginx/conf.d/ssl-params.conf

Paste the following configuration into the file:

ssl_certificate /etc/nginx/ssl/nginx.crt;

ssl_certificate_key /etc/nginx/ssl/nginx.key;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers "AES256+EECDH:AES256+EDH:!aNULL";

ssl_session_cache shared:SSL:10m;

ssl_session_timeout 10m;

ssl_session_tickets off;

add_header Strict-Transport-Security "max-age=63072000; includeSubdomains";

add_header X-Frame-Options DENY;

add_header X-Content-Type-Options nosniff;

Save the file.

Open the Servers.conf file and change the listen directive (port 80) to port 443 and add the ssl parameter.

Add a new server block above this one, forcing http traffic to https:

server {

listen 80;

return 301 https://$host$request_uri;

}

The final file will look like this:

proxy_cache_path /var/nginx/cache levels=1:2

keys_zone=upstream_cache:20m inactive=5m max_size=2G;

upstream myServers {

#hash $scheme$host$request_uri;

server 127.0.0.1:8081;

server 127.0.0.1:8082;

server 127.0.0.1:8083;

}

server {

listen 80;

return 301 https://$host$request_uri;

}

server {

listen 443 ssl;

root /usr/share/nginx/html;

error_log /var/log/nginx/upstream.error.log info;

access_log /var/log/nginx/upstream.access.log combined;

location / {

proxy_pass http://myServers;

proxy_cache_key $scheme$host$request_uri;

add_header X-Proxy-Cache $upstream_cache_status;

# Enable caching using the specified cache zone

proxy_cache upstream_cache;

proxy_cache_valid 200 5m;

}

}Save the file and reload NGINX

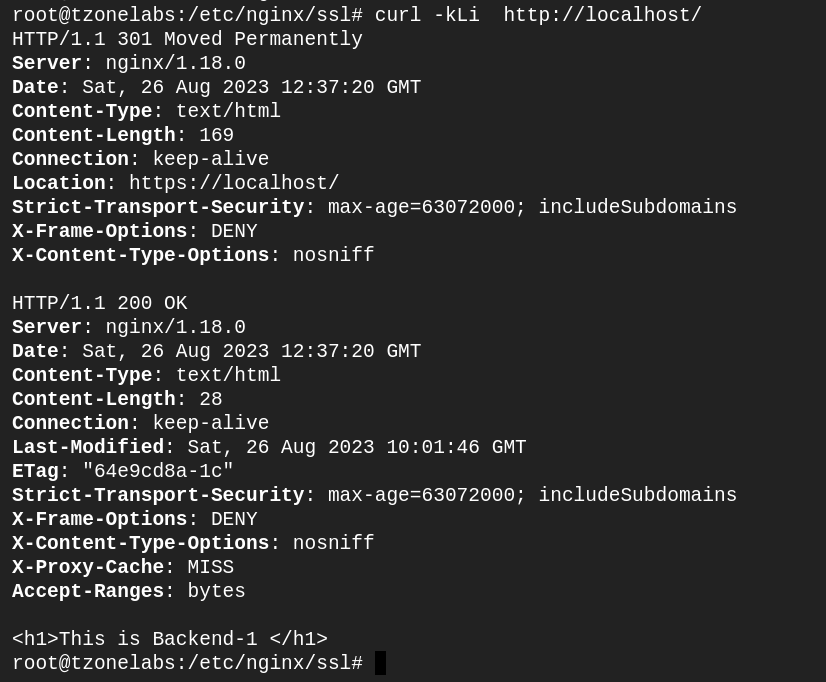

Test the access using the following cURL command (the -i parameter shows body content, the I parameter shows headers, the L parameter tells NGINX to follow any redirect, and the k parameter tells NGINX to ignore any SSL errors such as a self-signed certificate). You see both the return and the redirect:

curl -kLi http://localhost

Lab 9: Access Control

Restricting request rate

We will configure restrictions on the number of requests per second that a server can process for each client IP address

Open the Servers.conf configuration file and add the following limit_req_zone directive in the http context.

limit_req_zone $binary_remote_addr zone=limit:10m rate=1r/m;

In the location / context of the server, configure the limit_req directive:

limit_req zone=limit;

The final file:

limit_req_zone $binary_remote_addr zone=limit:10m rate=1r/m;

proxy_cache_path /var/nginx/cache levels=1:2 keys_zone=upstream_cache:20m inactive=5m max_size=2G;

upstream myServers {

#hash $scheme$host$request_uri;

server 127.0.0.1:8081;

server 127.0.0.1:8082;

server 127.0.0.1:8083;

}

server {

listen 80;

return 301 https://$host$request_uri;

}

server {

listen 443 ssl;

root /usr/share/nginx/html;

error_log /var/log/nginx/upstream.error.log info;

access_log /var/log/nginx/upstream.access.log combined;

location / {

proxy_pass http://myServers;

proxy_cache_key $scheme$host$request_uri;

proxy_cache_revalidate on;

add_header X-Proxy-Cache $upstream_cache_status;

# Enable caching using the specified cache zone

proxy_cache upstream_cache;

proxy_cache_valid 200 30s;

limit_req zone=limit;

}

}Save the file and reload NGINX

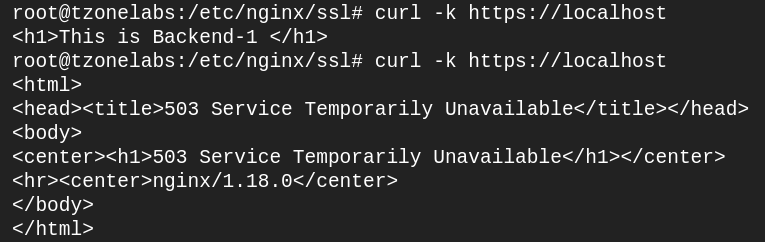

Run curl -k https://localhost twice. On the second attempt, you should see a “503 Service Temporarily Unavailable” error message. This is because we have exceeded the request of 1 per minute

Open Servers.conf and edit the limit_req directive to add the burst parameter as follows:

limit_req zone=limit burst=1;Save and reload NGINX

Run curl -k https://localhost again. You should notice a delay in the processing of the request for around 1 minute before finally seeing a response

Open Servers.conf and edit the limit_req directive to add the nodelay parameter as follows:

limit_req zone=limit burst=1 nodelay;

Save and reload NGINX

Run curl -k https://localhost again. This time there should be no delay in the processing of the second request.

Lab 10: Configure an API Gateway

Configure NGINX as an API Gateway

We will configure NGINX to proxy requests to an API service and to separate this configuration from the previous configurations designed to handle web traffic.

Note — first we will create some new directory and file

sudo mkdir -p /etc/nginx/api_conf.d

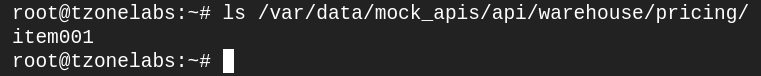

sudo mkdir -p /var/data/mock_apis/api/warehouse/pricing

create a file vim /var/data/mock_apis/api/warehouse/pricing/item001

Add the below content in the above file (item001)

{"id":"001","name":"playStation-5","price":749.95$"}

we will setup a mock API service that NGINX will proxy requests to. In the /etc/nginx/api_conf.d folder, create a file called api_backends.conf and add the following configuration:

sudo vim /etc/nginx/api_conf.d/api_backends.conf

## add below content in file and save file

server {

listen 8088;

root /var/data/mock_apis;

}

Now we need to tell NGINX to include our new folder in the configuration. Open the /etc/nginx/nginx.conf file and add the following line at the end of the http context:

include /etc/nginx/api_conf.d/*.conf;

Take a look at the contents of the /var/data/mock_apis/api/warehouse/pricing folder. You should see the item001 file created earlier.

Return to the /etc/nginx/api_conf.d folder and create a new configuration file called warehouse_api.conf

sudo vim /etc/nginx/api_conf.d/warehouse_api.conf

## add below content in file and save file

upstream warehouse_api {

server 127.0.0.1:8088;

}

server {

listen 80;

server_name tzonelabs.com;

root /usr/share/nginx/html;

location /api/warehouse {

proxy_pass http://warehouse_api;

}

}

Save the configuration and reload NGINX.

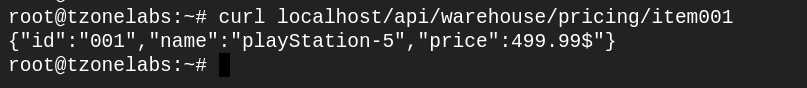

Test it by sending the following request:

curl localhost/api/warehouse/pricing/item001

Our request was successfully proxied to our mock API application. The mock API just returns the contents of the file item001 in the /var/data/mock_apis/api/warehouse/pricing folder. In a real-life production system, the API could be a service that looks up the price of the specified item and returns a similar JSON response.

Configure error handling

we will expand our API gateway configuration to handle errors

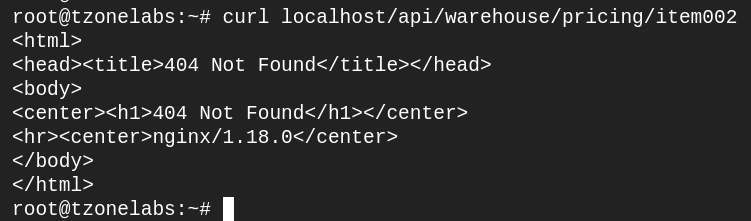

With the above configuration, send the following request and Observe the response:

curl localhost/api/warehouse/pricing/item002

We received this reply because in our mock API configuration, there’s no item002 file. In a real production API, if the service couldn’t find the item in the system, it would give a similar response.

However, this response is not suitable as it’s in HTML, designed for web browsers. Our API client isn’t typically a web browser, making an HTML error message response unsuitable.

Open the warehouse_api.conf file and modify it as below (the error_page directives, proxy_intercept_errors, and the named @404, @401, and @403 locations are new):

upstream warehouse_api {

server 127.0.0.1:8088;

}

server {

listen 80;

server_name tzonelabs.com;

root /usr/share/nginx/html;

error_page 404 = @404;

error_page 401 = @401;

error_page 403 = @403;

proxy_intercept_errors on;

location /api/warehouse {

proxy_pass http://warehouse_api;

}

location @404 {

return 404 '{"status":404,"message":"Resource not found"}\n';

}

location @401 {

return 401 '{"status":401,"message":"Unauthorized"}\n';

}

location @403 {

return 403 '{"status":403,"message":"Forbidden"}\n';

}

}

Save the file and reload NGINX.

Repeat the same curl request:

curl localhost/api/warehouse/pricing/item002

This time you should see a different message in JSON.

{"status":404,"message":"Resource not found"}

Configure Authentication

We will implement authentication by using simple API keys.

Open the warehouse_api.conf file and add the following map in the http context (below the upstream block):

map $http_apikey $api_client_name {

default "";

"aISkD2kSaam/d7zrwo0XbKBh" "client_one";

"9SCrM6RMv+MT2Nm0ed7oU8qY" "client_two";

}

The API keys in the map are checked by looking at the value of the “apikey” request header.

These keys are base64-encoded strings of random bytes. You can try generating your own keys by running:

openssl rand -base64 18

Add the auth_request directive to the server block in the warehouse_api.conf file as follows:

auth_request /_validate_apikey;

Add the following location to the server context:

location /_validate_apikey {

internal;

if ($http_apikey = "") {

return 401;

}

if ($api_client_name = "") {

return 403;

}

return 204;

}

Save the configuration file and reload NGINX.

The auth_request directive sends our request to an internal location to verify the API key. Here, we compare the apikey request header value with the values in our map for validation.

In a production server, we could use this location to proxy the request to an authentication service. The authentication service would be used to check the validity of the API key and return a response code to NGINX.

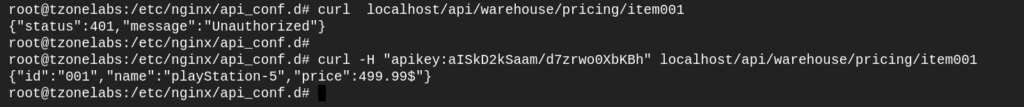

Run curl localhost/api/warehouse/pricing/item001. You will get a 401 status code.

This is because we have not provided an API key as part of the request.

Repeat the request but add the “apikey” header and specify one of the API keys listed in our map.

curl -H "apikey:aISkD2kSaam/d7zrwo0XbKBh" localhost/api/warehouse/pricing/item001

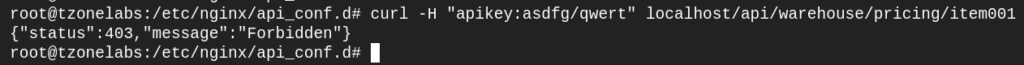

Now let's see what happens if we send an invalid API key, that is, any value that is not listed in the map. You will get a 403 status code.

This is because the key does not match any entry in the map, so the $api_client_name variable is set to its default value of “”. This results in the 403 response from the if block in our location.
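The decision made by the map and the /_validate_apikey location together can be summarized in a few lines of shell. This is a sketch for reasoning about the three outcomes only: the validate_apikey function is hypothetical and simply hard-codes the two keys from the map above.

```shell
#!/bin/sh
# Return the status code the internal auth location would produce.
validate_apikey() {
    # $1 = value of the apikey request header (empty if the header is missing)
    case "$1" in
        "")
            echo 401 ;;   # no key supplied
        "aISkD2kSaam/d7zrwo0XbKBh"|"9SCrM6RMv+MT2Nm0ed7oU8qY")
            echo 204 ;;   # key found in the map
        *)
            echo 403 ;;   # key supplied but not in the map
    esac
}

validate_apikey ""
validate_apikey "aISkD2kSaam/d7zrwo0XbKBh"
validate_apikey "not-a-real-key"
```

A 2xx result from the subrequest lets the original request continue to the API, while 401 and 403 are returned to the client through the JSON error locations defined earlier.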